What Is Tokenization in Payments? 🔐 Card Security in 60 Seconds

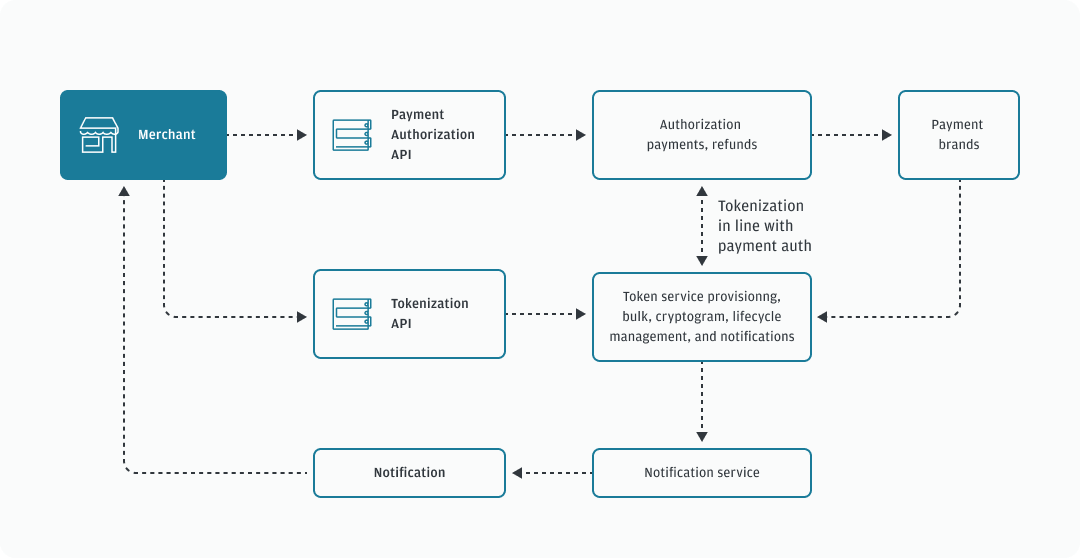

Payment Card Tokenization (Payments, Cards, Mobile)

Tokenization is the process of creating a digital representation of a real thing. It can be used to protect sensitive data or to process large amounts of data efficiently. To protect data over its full lifecycle, tokenization is often combined with end-to-end encryption: the data is encrypted in transit to the tokenization system or service, and a token replaces the original data on return.

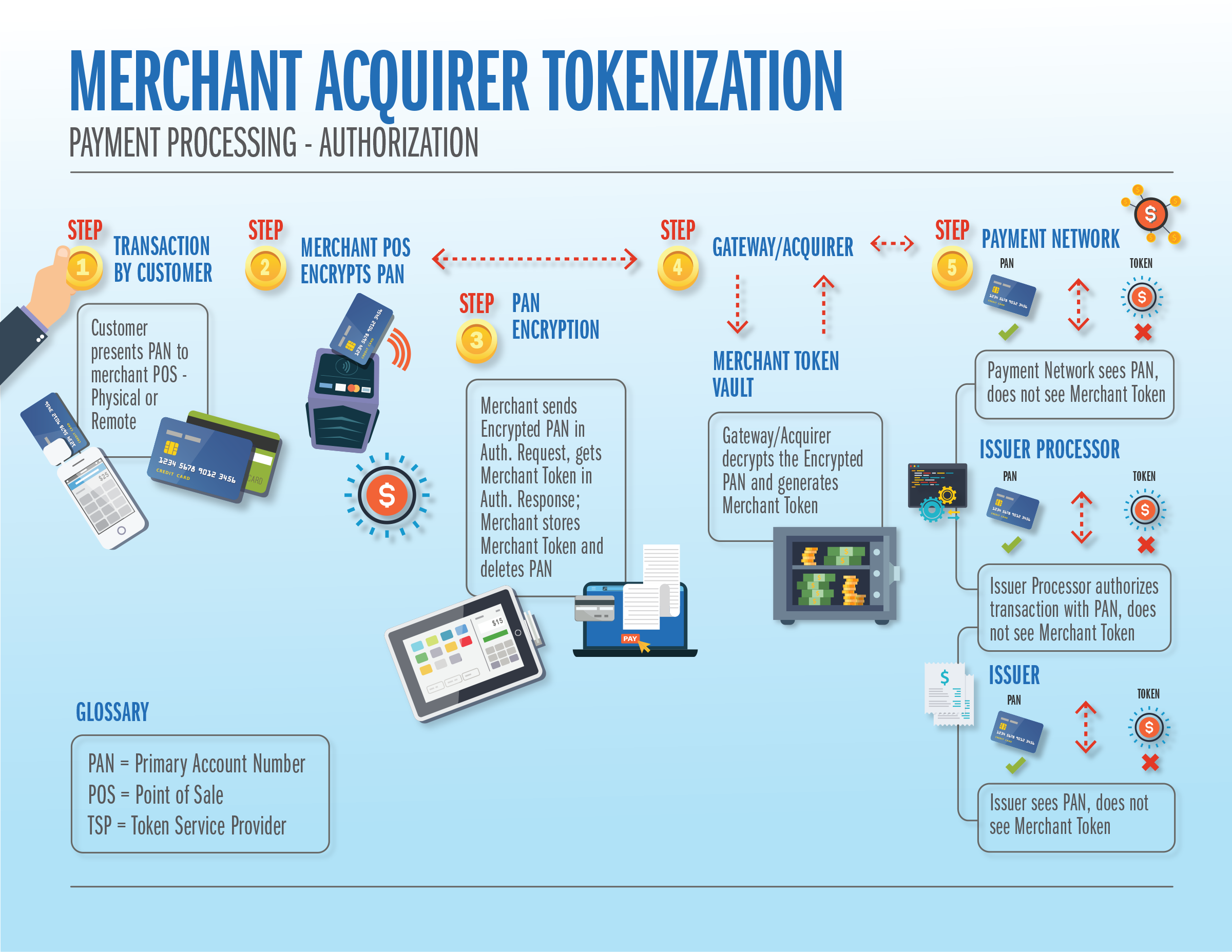

Tokenization Process (Novopayment)

In data security, tokenization is the process of converting sensitive data into a nonsensitive digital replacement, called a token, that maps back to the original. For example, sensitive data can be mapped to a token and placed in a digital vault for secure storage. More broadly, data tokenization replaces raw data with a digital representation. In the security sense, the substitutes are randomized and have no traceable relationship to the original data, so the original can be recovered only through the tokenization system that holds the mapping.

Tokenization has also long been a buzzword among crypto enthusiasts, who have argued for years that blockchain-based assets will change the underlying infrastructure of financial markets.
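The vault idea described above can be sketched in a few lines of Python. This is a minimal illustration rather than any vendor's implementation: the `TokenVault` class, the `tok_` prefix, and the in-memory dictionaries are assumptions made for the example. A real vault would sit behind access controls and store its data encrypted.

```python
import secrets


class TokenVault:
    """Toy in-memory vault that maps random tokens to sensitive values."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so one value always maps to one token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # The token is drawn at random, so it cannot be computed from the value.
        token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only the vault holds the mapping back to the original value.
        return self._token_to_value[token]


vault = TokenVault()
card_number = "4111111111111111"  # widely used test number, not a real account
token = vault.tokenize(card_number)
print(token)                    # random each run, reveals nothing about the card
print(vault.detokenize(token))  # the vault can recover the original value
```

Because the token is random rather than derived from the card number, a system that stores only tokens holds nothing that can be reversed without access to the vault.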

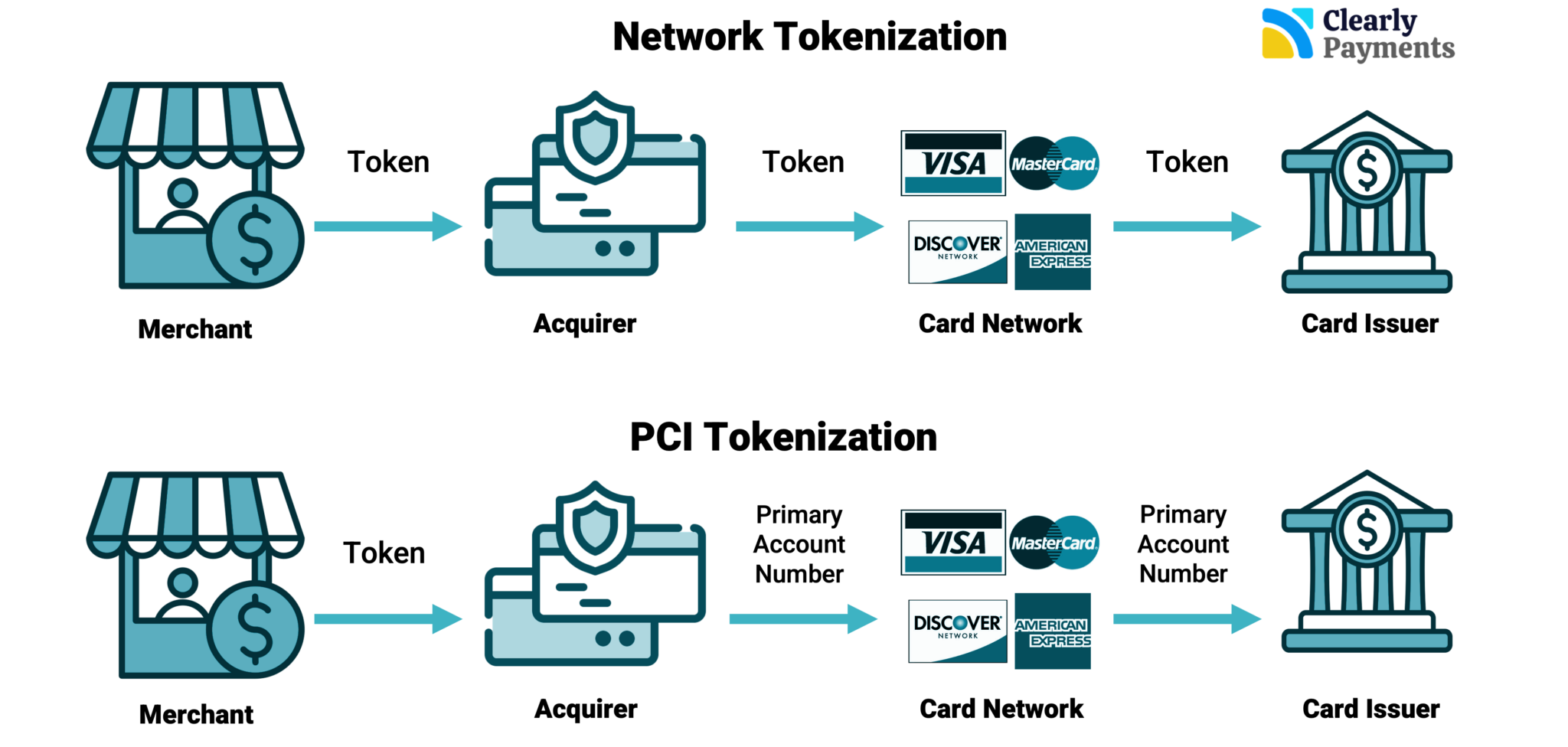

Know Your Payments: Tokenization

What is tokenization? In data security, it is the process of replacing sensitive, confidential data with non-valuable tokens. A token holds no intrinsic value or meaning outside its intended system and, without proper authorization, cannot be used to access the data it shields. Put more formally, tokenization hides the contents of a dataset by replacing sensitive or private elements with non-sensitive, randomly generated elements (tokens), such that the link between token values and real values cannot be reverse engineered.

In the blockchain sense, tokenization refers to representing real-world assets (RWA) on a blockchain using cryptocurrency tokens. Fine art, company stocks, and even intangible assets like intellectual property are examples of things that can exist on a blockchain through tokenization. Tokenizing real-world assets such as cash or treasuries enables global, 24/7 access and automated financial services. Tokenization may sound technical, but it…
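The data-security definition in this section says a token is meaningless outside its intended system and cannot be used to reach the underlying data without authorization. The following Python sketch illustrates both properties under simplifying assumptions: the `GuardedVault` class, the caller names, and the allow-list check are hypothetical stand-ins for whatever authentication a real tokenization service would use.

```python
import secrets


class GuardedVault:
    """Toy vault that only detokenizes for callers on an allow list."""

    def __init__(self, authorized_callers):
        self._authorized = set(authorized_callers)
        self._store = {}

    def tokenize(self, value: str) -> str:
        # Random token: carries no information about the value it stands for.
        token = secrets.token_urlsafe(16)
        self._store[token] = value
        return token

    def detokenize(self, token: str, caller: str) -> str:
        # Without authorization the token cannot be exchanged for the data.
        if caller not in self._authorized:
            raise PermissionError(f"{caller} may not detokenize")
        # A token issued by another system means nothing to this vault.
        if token not in self._store:
            raise KeyError("token unknown to this vault")
        return self._store[token]


payments = GuardedVault(authorized_callers={"payment-processor"})
token = payments.tokenize("4111111111111111")  # test number, not a real account

# The authorized caller gets the original value back.
print(payments.detokenize(token, caller="payment-processor"))

# An unauthorized caller holding the same token gets nothing.
try:
    payments.detokenize(token, caller="analytics-job")
except PermissionError as exc:
    print("denied:", exc)
```

A stolen token is therefore of little use on its own: an attacker would also need authorized access to the system that issued it.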

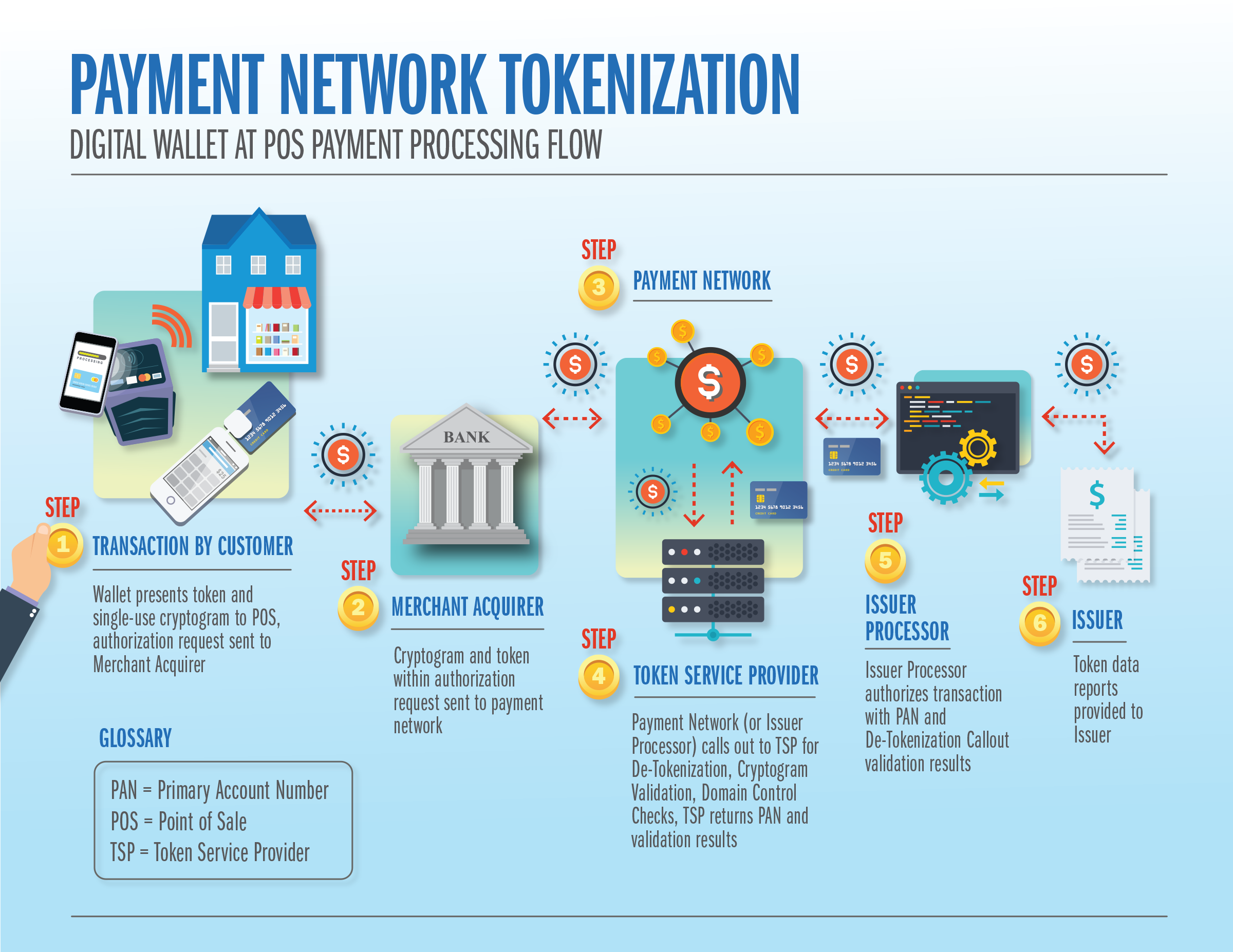

Network Tokenization (Payments Developer Portal)
