An Overview Of Tokenization In Data Security

Tokenization is a data security technique that replaces sensitive information, such as personally identifiable information (PII), payment card numbers, or health records, with a non-sensitive placeholder called a token. The replacement values are randomly generated, so the link between a token and the real value it stands for cannot be reverse engineered from the token itself.

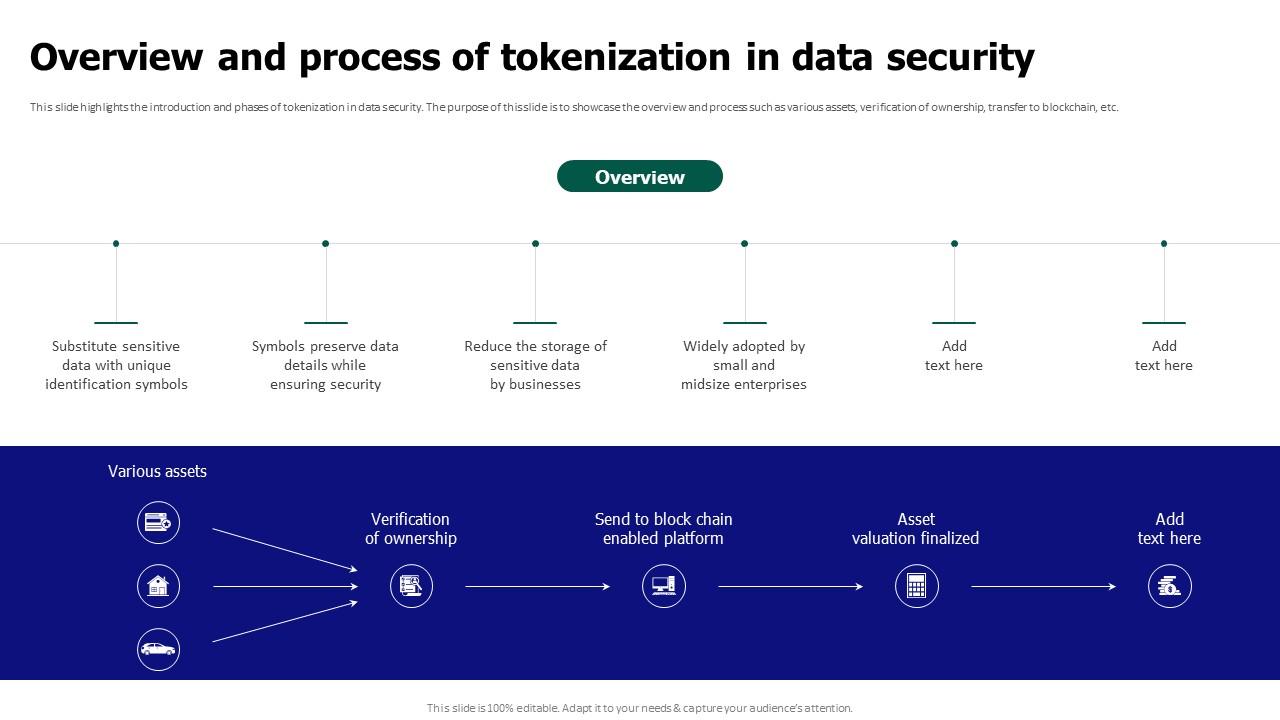

A token can be a random number, a string of characters, or any other non-identifiable value. When sensitive data is tokenized, the original data is stored securely in a token vault, and the token is used in its place.

As we delve deeper into tokenization in data security, we will explore its process, its benefits, and the differences between tokenization and encryption. This article is aimed at beginners, developers, and businesses looking to enhance data security, and it explains how tokenization frameworks work, what their key benefits are, and how to approach implementation. As businesses navigate an increasingly complex web of privacy regulations, data tokenization offers enhanced security without compromising usability, which is why it is increasingly seen as a central part of the future of digital security.
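The token vault described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `TokenVault` class, its method names, and the `tok_` prefix are hypothetical, and the in-memory dictionaries stand in for what would normally be a hardened, access-controlled data store.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory token vault (illustration only)."""

    def __init__(self):
        self._token_to_value = {}  # token -> original sensitive value
        self._value_to_token = {}  # original value -> token already issued

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so one value always maps to one token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # The token is random: it is not derived from the value,
        # so it cannot be reversed without access to the vault.
        token = "tok_" + secrets.token_hex(16)
        while token in self._token_to_value:  # regenerate on the rare collision
            token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only a lookup in the vault recovers the original value.
        return self._token_to_value[token]


vault = TokenVault()
card = "4111 1111 1111 1111"  # well-known test card number, not real data
token = vault.tokenize(card)
print(token)                    # a random value such as tok_9f2c...
print(vault.detokenize(token))  # the original card number
```

Because the token is drawn from a random source rather than computed from the card number, a system that stores only tokens holds nothing that can be turned back into the original data without access to the vault.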

Encryption locks your data in a vault, but tokenization empties the vault entirely. This security method protects your business by replacing sensitive customer data with tokens that are useless to an attacker, which simplifies compliance and reduces the impact of a data breach. In this article, we will break down how data tokenization works, explore its key benefits over traditional security measures, and examine why it has become an essential component of modern cybersecurity strategies.

... identifiers, or tokens, maintaining referential integrity without revealing the data itself. Unlike encryption, which obscures data with a reversible algorithm, or masking, which partially hides data, tokenization completely de...
